Proofpoint, a leading cybersecurity and compliance company, has released new findings showing how cybercriminals are exploiting AI-powered website builders to launch large-scale phishing and fraud campaigns.

We are often asked about the impact of AI on the threat landscape. While emails and scripts generated by large language models (LLMs) have had little impact so far, some AI tools are lowering the barrier to digital crime. Services that use AI to create websites in minutes are being abused by threat actors.

Cybercriminals are increasingly using an AI-powered website builder called Lovable to create and host credential phishing, malware, and fraud websites. Proofpoint observed campaigns that used Lovable services to distribute multifactor authentication (MFA) phishing kits such as Tycoon, malware such as cryptocurrency wallet drainers, and phishing kits that target credit card and personal information.

Lovable is a user-friendly website builder that generates sites from natural-language prompts and hosts them on lovable[.]app. Although it is a useful tool for people with limited web design knowledge, cybercriminals are exploiting it to build websites that are then distributed in phishing attacks. In April 2025, Proofpoint researchers confirmed that they could easily create fake websites impersonating major enterprises without encountering any guardrails.

Campaign details

Since February 2025, Proofpoint has detected hundreds of thousands of Lovable URLs as threats in email data every month, and the volume has increased month over month.

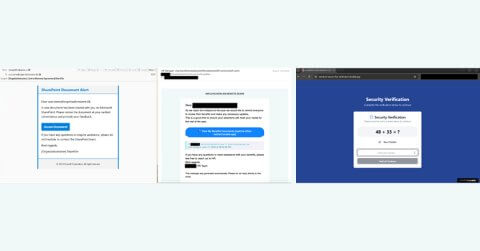

Tycoon Phishing Campaigns: In February 2025, Proofpoint identified a campaign that affected more than 5,000 organizations. Messages contained lovable[.]app URLs that directed recipients to a landing page presenting a math CAPTCHA; solving it redirected the victim to a counterfeit Microsoft authentication page.

These sites were designed to harvest user credentials, MFA tokens, and session cookies. Additional campaigns in June 2025 impersonated HR departments with emails about employee benefits and followed a similar attack chain.

Payment and Data Theft: In June 2025, Proofpoint detected a campaign of nearly 3,500 phishing emails impersonating UPS. Victims were directed to AI-generated UPS lookalike sites hosted on Lovable, which collected personal and payment information and posted the stolen details to Telegram. Because Lovable allows free templates to be reused, even legitimate projects can be cloned and weaponized with a simple prompt. Proofpoint has also observed sites impersonating banks to steal credentials, often using Lovable redirects and CAPTCHAs.

Crypto Wallet Drainer

Proofpoint has observed campaigns targeting cryptocurrency platforms. In June 2025, nearly 10,000 emails impersonated the DeFi platform Aave. Victims were redirected to Lovable-created websites mimicking Aave that prompted them to connect their cryptocurrency wallets. The likely goal was to drain assets from the connected wallets.

Further investigation

Initially, Proofpoint observed Lovable pages being used as redirectors to malicious sites. Further research revealed that credit card harvesters built on Lovable sent stolen data directly to Telegram. With just one or two prompts, Proofpoint researchers were able to create fully functional phishing sites, with deceptive language suggested automatically by the tool. Unlike responsible AI providers that block this kind of misuse, Lovable had no such safeguards.

Conclusion

Some AI tools can significantly lower the barrier for cybercriminals, especially those focused on crafting social engineering content that appeals to end users. Historically, creating phishing websites required time and technical skill. Now, automated website builders allow attackers to focus on scaling their attacks and refining their social engineering tactics.

Creators of such tools should implement safeguards to prevent exploitation. Because legitimate users also benefit from these apps, organizations should consider allow-listing policies for frequently abused platforms rather than relying on blanket trust.
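As a rough illustration of what an allow-listing policy might check, the following minimal sketch flags links in a plain-text message body that point at lovable.app or any of its subdomains, unless the specific host has been explicitly approved. It is not a Proofpoint or Lovable feature: the watched-domain list, the hypothetical approved host `approved-team-site.lovable.app`, and the simple URL regex are all assumptions, and a real mail gateway would also need to handle HTML bodies, redirects, and defanged links.

```python
import re
from urllib.parse import urlparse

# Platform hosting domains the organization treats as frequently abused.
# lovable.app comes from the report; any others would be local policy choices.
WATCHED_HOST_SUFFIXES = ("lovable.app",)

# Hypothetical allow-list of specific sites the organization has approved.
ALLOWED_HOSTS = frozenset({"approved-team-site.lovable.app"})

# Simple matcher for http(s) links in plain-text bodies (an assumption;
# it will not catch defanged links such as lovable[.]app).
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def is_watched_host(host: str) -> bool:
    """True if host is a watched domain itself or any subdomain of it."""
    host = host.lower().rstrip(".")
    return any(host == suffix or host.endswith("." + suffix)
               for suffix in WATCHED_HOST_SUFFIXES)


def flag_watched_urls(message_body: str) -> list[str]:
    """Return URLs on watched platforms whose host is not explicitly allowed."""
    flagged = []
    for url in URL_PATTERN.findall(message_body):
        # urlparse lowercases the hostname; strip a trailing dot for matching.
        host = (urlparse(url).hostname or "").rstrip(".")
        if is_watched_host(host) and host not in ALLOWED_HOSTS:
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    body = ("Your benefits update: https://hr-portal.lovable.app/login "
            "and https://approved-team-site.lovable.app/docs")
    print(flag_watched_urls(body))
```

Run on the sample body, only `https://hr-portal.lovable.app/login` is flagged, because the second link's host is on the allow-list. Matching on the parsed hostname suffix, rather than searching the raw URL for the string "lovable.app", avoids false matches on lookalike hosts such as `lovable.app.evil.example`.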

Image Credit: Proofpoint