By Saeed Ahmad, Managing Director, Middle East and North Africa, Callsign

With customer experience a key differentiator in today’s competitive online marketplace, businesses constantly strive to make the user journey simple, smooth, and safe. As more customers shop and transact online, chatbots have become increasingly widespread.

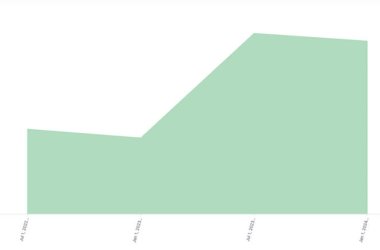

Many businesses in the region have deployed chatbots at their customer-facing touchpoints, across industries such as banking, online retail, hospitality, and healthcare. According to market research, the conversational computing platforms market in the Middle East and Africa (MEA), which includes chatbot technologies, is forecast to grow at a compound annual growth rate (CAGR) of 28.9% between 2020 and 2027.

Chatbots have evolved significantly – and rapidly – in the last few years. They’re becoming increasingly ubiquitous, intelligent, and useful. They’re also becoming something of a risk.

Chatbots have progressed to the point where the end user may not even realise they’re not interacting with a human. Thanks to this level of sophistication, people are more at ease than ever interacting with chatbots and AI assistants.

Unfortunately, cybercriminals and fraudsters have noticed too, and chatbots are almost certain to become an increasingly common attack vector in the coming months.

Understanding the risks

Bad actors are well aware of the proliferation of advanced chatbots, and they have a vast array of tools at their disposal. With skill sets spanning everything from script attacks to information gathering and social engineering, it’s no surprise that they have already identified chatbots as an attack vector they can manipulate to gain access to customers’ data.

Moreover, on their own, chatbots cannot differentiate between a genuine customer and an imposter. Even the most advanced chatbot won’t be able to see through a concerted fraud attempt backed by seemingly authentic data.

The adage that data is an organisation’s most valuable asset is often repeated; however, the importance a company places on fraud prevention may vary depending on other factors such as compliance, cost, and customer service. Customer service is a particular concern for businesses wary of adding friction to the customer experience by implementing additional security measures. As a result, many companies accept a certain level of fraud as the cost of keeping customers happy and engaged.

Attacks on chatbots, however, have the potential to affect all of these areas at once. Chatbots improve the customer experience while lowering costs by reducing reliance on contact centre staff, so an attack that compromises them can erode both benefits. And even if a company is insured against the financial consequences of fraud, it must consider how its customers will react, along with the loss of trust and reputational damage that will inevitably follow, not to mention the possibility of steep fines for compliance violations.

It’s here that the less visible damage is done. The business or the customers may get their money back; whether they remain customers is a different story.

Fighting the Bot War

Fortunately, the lines of defence that will protect today’s organisations from tomorrow’s chatbot attacks exist today.

On their own, chatbots cannot tell a genuine customer from an imposter. However, advances in behavioural biometric technology mean that chatbots will soon be able to distinguish genuine users from bad actors during a digital conversation. Behavioural biometrics identify a user not only by what they type but by how they type it, for example their typing rhythm and the timing between keystrokes.
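To make the idea of identifying someone by how they type more concrete, here is a minimal sketch of one behavioural signal, keystroke dynamics. It measures how long each key is held (dwell time) and the gap between releasing one key and pressing the next (flight time), then compares those timings against a profile enrolled from the user’s earlier typing. This is a hypothetical illustration, not Callsign’s method or any product’s API: the names `KeyEvent`, `build_profile`, `anomaly_score` and `looks_like_owner`, the mean-absolute-z-score metric and the 2.0 threshold are all assumptions chosen for readability, and a real deployment would likely combine many more signals and more robust models.

```python
# Hypothetical keystroke-dynamics sketch: the names, scoring metric and
# threshold are illustrative assumptions, not any vendor's actual method.
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class KeyEvent:
    """One keystroke: which key, and when it was pressed and released (ms)."""
    key: str
    down_ms: float
    up_ms: float


def timing_features(events: list[KeyEvent]) -> list[float]:
    """Dwell times (how long each key is held), then flight times
    (gap between releasing one key and pressing the next)."""
    dwell = [e.up_ms - e.down_ms for e in events]
    flight = [nxt.down_ms - cur.up_ms for cur, nxt in zip(events, events[1:])]
    return dwell + flight


def build_profile(samples: list[list[KeyEvent]]) -> list[tuple[float, float]]:
    """Enrol a user from two or more samples of the same phrase:
    per-feature mean and standard deviation (floored at 1 ms)."""
    columns = zip(*(timing_features(s) for s in samples))
    return [(mean(col), max(stdev(col), 1.0)) for col in columns]


def anomaly_score(profile: list[tuple[float, float]], attempt: list[KeyEvent]) -> float:
    """Mean absolute z-score of the attempt's timings against the profile."""
    features = timing_features(attempt)
    if len(features) != len(profile):
        raise ValueError("attempt must be the same phrase as the enrolment samples")
    return mean(abs(x - mu) / sigma for x, (mu, sigma) in zip(features, profile))


def looks_like_owner(profile: list[tuple[float, float]], attempt: list[KeyEvent],
                     threshold: float = 2.0) -> bool:
    """Accept the attempt if its typing rhythm is close to the enrolled profile."""
    return anomaly_score(profile, attempt) <= threshold


def typed(timings: list[tuple[str, float, float]]) -> list[KeyEvent]:
    """Build events from (key, down_ms, up_ms) tuples."""
    return [KeyEvent(k, d, u) for k, d, u in timings]


# Example: enrol three samples of "abc", then check two new attempts.
profile = build_profile([
    typed([("a", 0, 100), ("b", 150, 240), ("c", 300, 395)]),
    typed([("a", 0, 110), ("b", 165, 250), ("c", 310, 400)]),
    typed([("a", 0, 105), ("b", 160, 255), ("c", 320, 410)]),
])
owner = typed([("a", 0, 104), ("b", 158, 248), ("c", 310, 402)])
bot = typed([("a", 0, 30), ("b", 40, 70), ("c", 80, 110)])  # uniform, too fast

print(looks_like_owner(profile, owner))  # True
print(looks_like_owner(profile, bot))    # False
```

A scripted bot that fires every key in a uniform 30 ms burst scores far outside the enrolled profile, while an attempt typed at the owner’s usual rhythm stays well under the threshold. The per-feature z-score is used here only because it is easy to read; it is one simple choice among many.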

Ultimately, organisations that deploy chatbots should adopt robust authentication methods that secure chatbot interactions and detect fraudulent activity, protecting their customers’ privacy while still delivering seamless experiences.

Although chatbots have yet to pass the Turing test, the human element is already present. AI and machine learning advancements allow chatbots to remember, learn, and provide a more personalised experience.

This is a good thing, provided that improvements in security keep pace with improvements in chatbot humanisation. Chatbots are here to stay, and they’ll only get more intelligent, but so will the criminals. And if rules-based authentication isn’t fit for purpose today, and it isn’t, then it won’t be fit for a future where chatbots play a significant role.

Positive identification is already playing an essential role in this arena, and organisations that want to succeed in the future should start thinking about it now. The bot war may be in its early stages, but with bad actors and fraudsters routinely refusing to give ground, there’s no room for complacency.